This post is inspired by a post by Steve Eddins, who works at MathWorks, the company that builds MATLAB. He is a software development manager working on MATLAB and a co-author of the book "Digital Image Processing Using MATLAB". I use both MATLAB and eCognition, so I wondered whether the same thing can be done in eCognition. eCognition has basic morphological operators such as dilation and erosion. Advanced mathematical morphology (MM) operators such as opening by reconstruction, connected component labeling, skeletonization and many others are not available in eCognition.

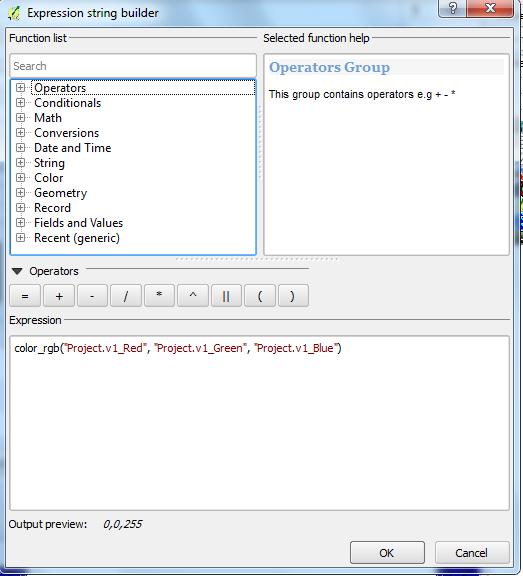

The problem of almost connected components:

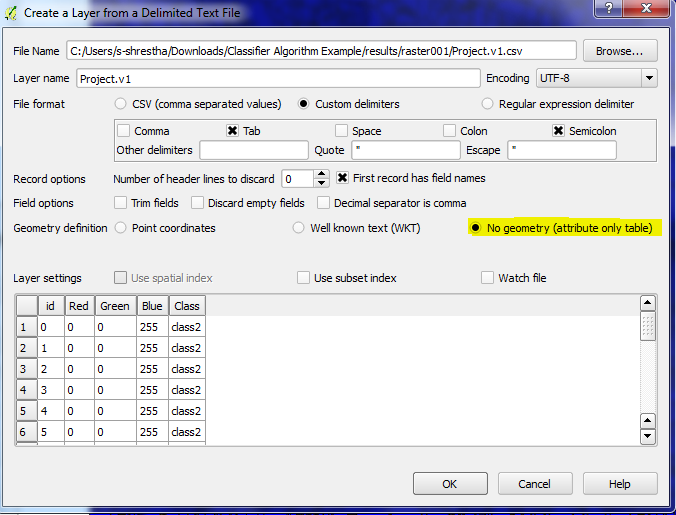

Here is a simple synthetic image containing a number of circular blobs. How can we label and measure the three clumps instead of the smaller circles? Two circles are almost connected if they are within 25 pixels of each other.

Figures: binary circles; connected components labeling; almost connected components labeling.
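To make the definition concrete, here is a minimal Python sketch (not eCognition and not MATLAB). It assumes each blob is already described by a centre and a radius, and it reads "within 25 pixels" as an edge-to-edge gap of at most 25 pixels. Both are my assumptions for illustration, and the function name `almost_connected_labels` and the sample circles are made up.

```python
import math

def almost_connected_labels(circles, max_gap=25.0):
    """Group circles whose edge-to-edge gap is at most max_gap.

    circles: list of (x, y, radius) tuples in pixel units (assumed input).
    Returns one clump label per circle, numbered from 1.
    """
    parent = list(range(len(circles)))

    def find(i):
        # Follow parent links to the root, shortening the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, (xi, yi, ri) in enumerate(circles):
        for j in range(i + 1, len(circles)):
            xj, yj, rj = circles[j]
            gap = math.hypot(xi - xj, yi - yj) - ri - rj
            if gap <= max_gap:
                parent[find(i)] = find(j)  # merge the two groups

    # Renumber the roots as consecutive labels in order of first appearance.
    labels, ids = [], {}
    for i in range(len(circles)):
        labels.append(ids.setdefault(find(i), len(ids) + 1))
    return labels

# Made-up sample data: two clumps and one isolated circle.
circles = [(20, 20, 10), (50, 20, 10), (80, 30, 10),
           (300, 40, 10), (330, 60, 10),
           (150, 300, 10)]
print(almost_connected_labels(circles))
```

Note that the first and third circles end up in the same clump even though they are more than 25 pixels apart, because both are close to the second one. Almost-connectedness is transitive in this sense, just like ordinary connectivity.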

Of course, this can be solved in eCognition, but you have to be familiar with several eCognition concepts: PPO (parent process object), object variables, multi-level representation and temporary layers. My workflow for the solution is as follows:

- Use multi-threshold segmentation to get the circles
- Use the distance map algorithm to get a distance map of the binary circles
- Use chessboard segmentation to get pixel-level unclassified objects
- Use multi-threshold segmentation based on the distance map to get the clumps
- Copy the level above
- Use the object variable concept to assign each clump a unique ID
- Convert to sub-objects to get the original circles at the upper level
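The same idea can be sketched outside eCognition. The following pure-Python example is only an analogue of the workflow above, not the rule set: it builds a distance map, thresholds it to grow the blobs into clumps, labels the clumps, and hands each clump ID back to the original blob pixels. The function names, the tiny test image, the chessboard distance metric and the choice of thresholding at half the allowed gap are all my assumptions for illustration.

```python
from collections import deque

NEIGHBOURS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx]

def label_components(mask):
    """Label 8-connected True regions of a 2D boolean grid (0 = background)."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                count += 1  # new region found: flood-fill it
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in NEIGHBOURS:
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

def distance_map(mask):
    """Chessboard distance from each pixel to the nearest True pixel."""
    rows, cols = len(mask), len(mask[0])
    dist = [[None] * cols for _ in range(rows)]
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                dist[r][c] = 0
                queue.append((r, c))
    while queue:  # multi-source breadth-first search
        y, x = queue.popleft()
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols and dist[ny][nx] is None:
                dist[ny][nx] = dist[y][x] + 1
                queue.append((ny, nx))
    return dist

def almost_connected(mask, max_gap):
    """Return (labels, count): each blob pixel carries its clump ID."""
    dist = distance_map(mask)
    # Growing every blob by max_gap / 2 bridges gaps of roughly max_gap pixels.
    grown = [[d is not None and 2 * d <= max_gap for d in row] for row in dist]
    clumps, count = label_components(grown)
    # Keep the clump ID only on the original blob pixels.
    labels = [[clumps[r][c] if mask[r][c] else 0 for c in range(len(mask[0]))]
              for r in range(len(mask))]
    return labels, count

# Made-up test image: three blobs, the first two only 4 pixels apart.
image = ["##....##..........##",
         "##....##..........##"]
mask = [[ch == "#" for ch in row] for row in image]
labels, count = almost_connected(mask, max_gap=4)
print(count)
for row in labels:
    print("".join(str(v) for v in row))
```

On this toy image ordinary connected components labeling finds three blobs, while the sketch reports two clumps: the two left blobs share ID 1 and the right blob gets ID 2. For the 25-pixel rule in the problem statement, `max_gap` would be 25.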

I will post the rule set after some time. I have given you enough hints on how to proceed. Get your hands dirty!

Figure: almost connected components labelling within eCognition.

The concept of “almost connected components” can be applied in remote sensing, for example to cluster buildings detected in remote sensing images when analyzing the micro-climate of urban areas. There can be various other applications. Can you think of any?